Caching requests

Browser caching

The Cache-Control: header supersedes older caching headers (e.g. Expires).

All modern browsers support Cache-Control, so it is all we need.

Here is an example of how to use Cache-Control:

```yaml
url:
  cache-example:
    pattern: /$YAMLURL/path          # Pick any pattern
    handler: FileHandler             # and handler
    kwargs:
      path: $YAMLPATH/path           # Pass it any arguments
      headers:                       # Define HTTP headers
        Cache-Control: max-age=3600  # Keep page in browser cache for 1 hour (3600 seconds)
```

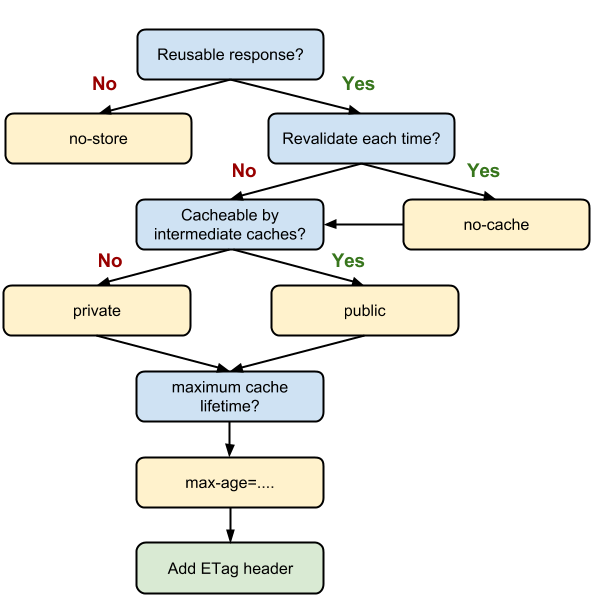

The cache is used by browsers as well as proxies. You can also specify these additional options:

- no-store: Never store the response. Always download it again from the server.
- no-cache: Store the response, but always check with the server before using it. Download it again only if the response has changed.
- private: Cache on browsers, not on intermediate proxies. Use this when the data is sensitive.
- public: Cache even if the HTTP status code is an error, or if HTTP authentication is used.

Here are some typical Cache-Control headers. The durations given here are indicative. Change them based on your needs.

- External libraries: cache publicly for 10 years. They never change.
  Cache-Control: "public, max-age=315360000"
- Static files: cache publicly for a day. They change rarely.
  Cache-Control: "public, max-age=86400"
- Shared dashboards: cache publicly for an hour. Data refreshes slowly.
  Cache-Control: "public, max-age=3600"
- User dashboards: cache privately for an hour.
  Cache-Control: "private, max-age=3600"
- Real-time pages: never cache this page anywhere.
  Cache-Control: "no-cache, no-store"
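The max-age values above are plain arithmetic in seconds. The sketch below uses a hypothetical helper, cache_control, which is not part of Gramex. It generates the same header values and confirms that 10 years (ignoring leap days) is 315360000 seconds:

```python
# Durations in seconds behind the typical Cache-Control headers
MINUTE = 60
HOUR = 60 * MINUTE
DAY = 24 * HOUR
YEAR = 365 * DAY  # ignores leap days, like the 315360000 figure


def cache_control(scope, max_age):
    """Build a Cache-Control value such as 'public, max-age=3600' (hypothetical helper)."""
    if scope not in ("public", "private"):
        raise ValueError(f"unexpected scope: {scope!r}")
    return f"{scope}, max-age={max_age}"


headers = {
    "external libraries": cache_control("public", 10 * YEAR),
    "static files": cache_control("public", DAY),
    "shared dashboards": cache_control("public", HOUR),
    "user dashboards": cache_control("private", HOUR),
    "real-time pages": "no-cache, no-store",
}

for kind, value in headers.items():
    print(f"{kind:20} Cache-Control: {value}")
```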

To reload a page while ignoring the cache, press Ctrl-F5 in the browser. Google's developer documentation (Google Dev Docs) has a useful reference for deciding which cache-control checks to apply.

Server caching

The url: handlers accept a cache: key that defines caching behaviour. For example, this random URL generates a random letter every time it is called:

```yaml
random:
  pattern: /$YAMLURL/random
  handler: FunctionHandler
  kwargs:
    function: random.choice(['a', 'b', 'c', 'd', 'e', 'f', 'g', 'h', 'i', 'j'])
```

Adding cache: true to this URL caches the response the first time it is called. When random-cached is reloaded, the same letter is shown every time.

```yaml
random-cached:
  pattern: /$YAMLURL/random-cached
  handler: FunctionHandler
  kwargs:
    function: random.choice(['a', 'b', 'c', 'd', 'e', 'f', 'g', 'h', 'i', 'j'])
  cache: true
```
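Conceptually, cache: true memoizes a handler's response against a cache key. The sketch below is a hypothetical in-process stand-in that reproduces the behaviour of random-cached. The names serve and _response_cache are illustrative and are not Gramex internals.

```python
import random

# Hypothetical in-process response cache: cache key (here, the URL) -> response
_response_cache = {}


def random_letter():
    """Mimics the FunctionHandler above: a random letter from 'a' to 'j'."""
    return random.choice("abcdefghij")


def serve(url, cache=False):
    """Return a response for url. With cache=True, reuse the first response."""
    if not cache:
        return random_letter()
    if url not in _response_cache:
        _response_cache[url] = random_letter()  # computed only on the first call
    return _response_cache[url]


print([serve("/random") for _ in range(5)])                     # usually varies
print([serve("/random-cached", cache=True) for _ in range(5)])  # same letter each time
```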

Cache keys

The response from any handler is cached against a cache key. By default, this is the URL, but you can change it using the cache.key argument.

For example, cache-full-url?x=1 and cache-full-url?x=2 return different values because they are cached against the full URL. But cache-only-path?x=1 and cache-only-path?x=2 return the same value because they are cached against only the path.

```yaml
cache-full-url:
  pattern: /$YAMLURL/cache-full-url
  handler: FunctionHandler
  kwargs:
    function: random.choice(['a', 'b', 'c', 'd', 'e', 'f', 'g', 'h', 'i', 'j'])
  cache:
    key: request.uri      # This is the default cache key

cache-only-path:
  pattern: /$YAMLURL/cache-only-path
  handler: FunctionHandler
  kwargs:
    function: random.choice(['a', 'b', 'c', 'd', 'e', 'f', 'g', 'h', 'i', 'j'])
  cache:
    key: request.path     # only use the request path, not arguments
```

The key can accept multiple values. Each value can be one of:

- request.<attr>: For example, request.uri returns the request URI. Valid attributes are:
  - request.uri: The default mechanism = request.path + request.query
  - request.path: Same cache irrespective of query parameters
  - request.query: Same cache irrespective of URL path
  - request.remote_ip: Different caches for each client IP address
  - request.protocol: Different caches for “http” vs “https”
  - request.host: Different caches when serving on multiple domain names
  - request.method: Different caches for “GET” vs “POST”, etc.
- headers.<header>: This translates to handler.request.headers[header]. For example, headers.Content-Type returns the Content-Type header. The match is case-insensitive. Multiple values are joined by commas.
- args.<arg>: For example, args.x returns the value of the ?x= query parameter. Multiple values are joined by commas.
- cookies.<cookie>: This translates to handler.request.cookies[cookie]. For example, cookies.user returns the value of the user cookie.
- user.<attr>: This translates to handler.current_user[attr]. For example, user.email returns the user’s email attribute if it is set.

For example, this configuration caches based on the request URI and user. Each URI is cached independently for each user ID.

```yaml
cache-by-user-and-browser:
  ...
  cache:
    key:               # Cache based on
      - request.uri    # the URL requested
      - user.id        # and handler.current_user['id'] if it exists
```

Google, Facebook, Twitter and LDAP provide the user.id attribute. DB Auth provides it if your user table has an id column. But you can use any other attribute instead of id, e.g. user.email for Google, user.screen_name for Twitter, etc.
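To show how a list of key specs becomes one cache key, here is a hypothetical sketch. Gramex builds keys from the Tornado request and handler. This stand-in instead takes plain strings and dicts (uri, headers, cookies, user), plus extra request attributes such as method as keyword arguments. Two requests share a cached response only when every part of the tuple matches.

```python
from urllib.parse import parse_qs, urlsplit


def cache_key(keys, uri, headers=None, cookies=None, user=None, **request_attrs):
    """Build a tuple cache key from specs like 'request.path' or 'user.id' (sketch)."""
    split = urlsplit(uri)
    request = {"uri": uri, "path": split.path, "query": split.query, **request_attrs}
    args = parse_qs(split.query)
    headers = {name.lower(): value for name, value in (headers or {}).items()}
    cookies = cookies or {}
    user = user or {}
    parts = []
    for spec in keys:
        source, _, name = spec.partition(".")
        if source == "request":
            value = request.get(name, "")
        elif source == "headers":
            value = headers.get(name.lower(), "")  # case-insensitive match
        elif source == "args":
            value = ",".join(args.get(name, []))   # multiple values joined by comma
        elif source == "cookies":
            value = cookies.get(name, "")
        elif source == "user":
            value = str(user.get(name, ""))        # empty if nobody is logged in
        else:
            raise ValueError(f"unknown cache key: {spec}")
        parts.append(value)
    return tuple(parts)


print(cache_key(["request.path"], "/cache-only-path?x=1"))
print(cache_key(["request.uri", "user.id"], "/page?x=1", user={"id": "alice"}))
```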

Cache expiry

You can specify an expiry duration. For example, cache-expiry caches the response for 5 seconds.

```yaml
cache-expiry:
  pattern: /$YAMLURL/cache-expiry
  handler: FunctionHandler
  kwargs:
    function: random.choice(['a', 'b', 'c', 'd', 'e', 'f', 'g', 'h', 'i', 'j'])
  cache:
    expiry:
      duration: 5       # Cache the request for 5 seconds
```

By default, the cache expires either after 10 years, or when the cache store runs out of space.
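Expiry can be pictured as storing a deadline next to each value. This is a minimal sketch, not Gramex's implementation. ExpiringCache is a hypothetical class whose default duration mirrors the 10-year default mentioned above, and its clock is injectable so the behaviour can be checked without waiting.

```python
import time

TEN_YEARS = 10 * 365 * 24 * 60 * 60  # default expiry described above, in seconds


class ExpiringCache:
    """Dict-backed cache whose entries expire after a duration in seconds."""

    def __init__(self, default_duration=TEN_YEARS, clock=time.monotonic):
        self.default_duration = default_duration
        self.clock = clock          # injectable so expiry can be tested
        self._store = {}            # key -> (expires_at, value)

    def set(self, key, value, duration=None):
        ttl = self.default_duration if duration is None else duration
        self._store[key] = (self.clock() + ttl, value)

    def get(self, key, default=None):
        item = self._store.get(key)
        if item is None:
            return default
        expires_at, value = item
        if self.clock() >= expires_at:
            del self._store[key]    # expired: the handler must recompute it
            return default
        return value


cache = ExpiringCache()
cache.set("/cache-expiry", "c", duration=5)  # like duration: 5 in the YAML
print(cache.get("/cache-expiry"))
```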

Cache status

By default, only requests that return an HTTP 200 or HTTP 304 status code are cached. You can cache other status codes via the status: configuration.

```yaml
url:
  cache-errors:
    pattern: /$YAMLURL/cache-errors
    ...
    cache:
      status: [200, 404, 500]   # Cache all of these HTTP responses
```

Cache stores

Gramex provides an in-memory cache, but you can define your own cache in the root cache: section as follows:

```yaml
cache:
  small-in-memory-cache:        # Define a name for the cache
    type: memory                # This is an in-memory cache
    size: 100000                # Just allow 100K of data in the cache
  big-disk-cache:               # Define a name for the cache
    type: disk                  # This is an on-disk cache
    path: $YAMLPATH/.cache      # Location of the disk cache directory
    size: 1000000000            # Allow ~1GB of data in the cache
  distributed-cache:            # Define a name for the cache
    type: redis                 # This is a redis cache
    path: localhost:6379:0      # Connection string for Redis instance
    size: 1000000000            # Allow ~1GB of data in the cache
```

Memory caches are the default. Gramex has a 500 MB in-memory cache called memory based on cachetools. When the size limit is reached, the least recently used items are discarded. This cache is used by gramex.cache.open. To change its size, use:

```yaml
cache:
  memory:               # This is the name of the pre-defined Gramex cache
    type: memory        # This is an in-memory cache
    size: 5000000       # Just allow 5MB of data in the cache instead of 500 MB (default)
```
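The "discard the least recently used items" rule can be sketched with an OrderedDict. This is an illustration only. Gramex relies on cachetools, whose real LRUCache class takes similar maxsize and getsizeof parameters. The LRUCache below is a hypothetical stand-in that measures each value's size with len.

```python
from collections import OrderedDict


class LRUCache:
    """Evicts least recently used items once total size exceeds maxsize (sketch)."""

    def __init__(self, maxsize, getsizeof=len):
        self.maxsize = maxsize
        self.getsizeof = getsizeof
        self.currsize = 0
        self._data = OrderedDict()

    def __getitem__(self, key):
        value = self._data[key]
        self._data.move_to_end(key)  # mark as most recently used
        return value

    def __setitem__(self, key, value):
        size = self.getsizeof(value)
        if size > self.maxsize:
            raise ValueError("value too large for cache")
        if key in self._data:
            self.currsize -= self.getsizeof(self._data.pop(key))
        while self.currsize + size > self.maxsize:
            _, evicted = self._data.popitem(last=False)  # least recently used first
            self.currsize -= self.getsizeof(evicted)
        self._data[key] = value
        self.currsize += size

    def __contains__(self, key):
        return key in self._data
```

For example, with maxsize=10, storing three 4-character strings evicts whichever of the first two was read least recently.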

Disk caches are based on the diskcache library. When the size limit is reached, the oldest items are discarded. But disk caches are MUCH slower than memory caches, and defeat the purpose of data caching. Use this if your app is computation or query intensive, and you need to share the cache across different instances on the same server.

Redis caches allow multiple Gramex instances to cache objects in a Redis server. This allows the same cache to be shared across different servers.

Redis cache requires Redis 5.0 or later to be running. When the size limit is reached, the oldest items are discarded. (Note: the size limit is set for the Redis instance, not for a specific DB. So avoid using the same Redis instance for other apps.)

To use a different cache by default, specify default: true against the cache. The last cache with default: true is used as the default cache.

```yaml
cache:
  memory:
    default: false          # Don't use memory as the default cache
  different-memory-cache:
    type: memory
    size: 1000000000        # Allow ~1GB of data in the cache
    default: true
```
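The "last cache with default: true wins" rule is easy to express once the YAML is loaded into an ordered mapping. The sketch assumes the cache: section is available as a Python dict. Dicts preserve insertion order, so the YAML key order carries over. default_cache_name is a hypothetical function, not a Gramex API.

```python
def default_cache_name(cache_config):
    """Return the last cache marked default: true, or None if there is none."""
    default = None
    for name, conf in cache_config.items():  # dicts preserve insertion order
        if conf.get("default"):
            default = name  # a later default: true overrides an earlier one
    return default


config = {
    "memory": {"default": False},
    "different-memory-cache": {"type": "memory", "size": 1000000000, "default": True},
}
print(default_cache_name(config))
```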

Note: Persistent caches like disk and redis pickle Python objects. Some objects (e.g. Tornado templates) cannot be pickled. These caches do not cache such objects; they skip them and log an error. Use the memory cache if you need to cache objects that cannot be pickled.
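The skip-and-log behaviour described in the note can be illustrated with the standard pickle module. This is a hypothetical sketch, not Gramex's code. store_if_picklable keeps a value only if pickle.dumps accepts it. Otherwise it logs an error and leaves the store untouched.

```python
import logging
import pickle

log = logging.getLogger(__name__)


def store_if_picklable(store, key, value):
    """Save pickle.dumps(value) in store. Skip (and log an error) if it can't be pickled."""
    try:
        payload = pickle.dumps(value)
    except (pickle.PicklingError, TypeError, AttributeError) as exc:
        log.error("Not caching %r: %s", key, exc)
        return False
    store[key] = payload
    return True


disk_like = {}
store_if_picklable(disk_like, "rows", [{"city": "A", "sales": 1}])  # stored
store_if_picklable(disk_like, "gen", (i for i in range(3)))         # skipped and logged
```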

Your functions can access these caches from the cache object in gramex.service. For example, the default in-memory Gramex cache is at gramex.service.cache['memory']. The disk cache above is at gramex.service.cache['big-disk-cache'].

The cache stores can be treated like a dictionary. They also support a .set() method which accepts an expire= parameter. For example:

```python
from gramex import service      # Available only if Gramex is running

cache = service.cache['big-disk-cache']
cache['key'] = 'value'
cache['key']                    # returns 'value'
del cache['key']                # clears the key
cache.set('key', 'value', expire=30)    # key expires in 30 seconds
```

Cache static files

You can cache static files with both server and client side caching. For example, to cache the node_modules and assets directories, use this configuration:

```yaml
static_files:
  pattern: /$YAMLURL/(node_modules/.*|assets/.*)    # Map all static files
  handler: FileHandler
  kwargs:
    path: $YAMLPATH/                                # from under this directory
    headers:
      Cache-Control: "public, max-age=315360000"    # Cache for 10 years on the browser
  cache: true                                       # Also cache on the server
```

To force a refresh, append ?v=xx where xx is a new number. Because the URL changes, both the browser and the server treat it as a new cache entry. (The use of ?v= is arbitrary. You can use any query parameter instead of v.)
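The versioned URLs can be generated with the standard urllib.parse module. This is a sketch. bust is a hypothetical helper that sets (or replaces) the version parameter and keeps the other query parameters, so each new version produces a new cache key.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def bust(url, version, param="v"):
    """Return url with ?<param>=<version> set, replacing any existing value."""
    parts = urlsplit(url)
    query = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True) if k != param]
    query.append((param, str(version)))
    return urlunsplit(parts._replace(query=urlencode(query)))


print(bust("/assets/app.js", 2))
print(bust("/assets/app.js?v=1&x=a", 2))
```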

Data caching

gramex.cache.open opens files and caches them until they change. You can use it to load any type of file. For example:

```python
import gramex.cache

data = gramex.cache.open('data.csv', encoding='utf-8')
```

This loads data.csv using pd.read_csv('data.csv', encoding='utf-8'). The next time this is called, if data.csv is unchanged, the cached results are returned.

You can also specify that the file is a CSV file by explicitly passing a 2nd parameter as 'csv'. For example:

```python
data = gramex.cache.open('data.csv', 'csv', encoding='utf-8')
```

(v1.23 made the 2nd parameter optional. It was mandatory before then.)

The 2nd parameter can take the following values:

- gramex.cache.open(path, 'text', ...) loads text files using io.open. You can use txt instead of text
- gramex.cache.open(path, 'json', ...) loads JSON files using json.load
- gramex.cache.open(path, 'jsondata', ...) loads JSON files using pd.read_json
- gramex.cache.open(path, 'yaml', ...) loads YAML files using yaml.load
- gramex.cache.open(path, 'config', ...) loads YAML files, but also allows variable substitution, imports, and other config file features.
- gramex.cache.open(path, 'csv', ...) loads CSV files using pd.read_csv
- gramex.cache.open(path, 'excel', ...) loads Excel files using pd.read_excel. You can use xlsx or xls instead of excel
- gramex.cache.open(path, 'hdf', ...) loads HDF files using pd.read_hdf
- gramex.cache.open(path, 'html', ...) loads HTML files using pd.read_html
- gramex.cache.open(path, 'sas', ...) loads SAS files using pd.read_sas
- gramex.cache.open(path, 'stata', ...) loads Stata files using pd.read_stata
- gramex.cache.open(path, 'table', ...) loads tabular text files using pd.read_table
- gramex.cache.open(path, 'parquet', ...) loads parquet files using pd.read_parquet. Requires pyarrow or fastparquet
- gramex.cache.open(path, 'feather', ...) loads feather files using pd.read_feather. Requires pyarrow
- gramex.cache.open(path, 'template', ...) loads text using tornado.template.Template
- gramex.cache.open(path, 'md', ...) loads text using markdown.markdown. You can use markdown instead of md

The 2nd parameter can also be a function like function(path, **kwargs). For example:

```python
import os
import pandas as pd
import gramex.cache

# Return file size if it has changed
file_size = gramex.cache.open('data.csv', lambda path: os.stat(path).st_size)

# Read Excel file. Keyword arguments are passed to pd.read_excel
data = gramex.cache.open('data.xlsx', pd.read_excel, sheet_name='Sheet1')
```

To transform the data after loading, you can use a transform= function. For example:

```python
# After loading, return len(data)
row_count = gramex.cache.open('data.csv', 'csv', transform=len)

# Return multiple calculations
def transform(data):
    return {'count': len(data), 'groups': data.groupby('city')}

result = gramex.cache.open('data.csv', 'csv', transform=transform)
```
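The "reload only if the file changed, then transform" idea can be sketched with the standard library. This is not Gramex's implementation. cached_open and read_csv_rows are hypothetical names, and read_csv_rows stands in for pd.read_csv so the sketch has no third-party dependencies. A change is detected through the file's modification time and size. The cache key also includes the loader, transform and keyword arguments, so different ways of reading the same file do not collide.

```python
import csv
import os

# (absolute path, loader, transform, kwargs) -> ((mtime, size), result)
_file_cache = {}


def cached_open(path, loader, transform=None, **kwargs):
    """Return loader(path, **kwargs), optionally transformed, re-reading only on change."""
    stat = os.stat(path)
    signature = (stat.st_mtime, stat.st_size)
    key = (os.path.abspath(path), loader, transform, tuple(sorted(kwargs.items())))
    cached = _file_cache.get(key)
    if cached is None or cached[0] != signature:
        data = loader(path, **kwargs)
        result = data if transform is None else transform(data)
        _file_cache[key] = (signature, result)
    return _file_cache[key][1]


def read_csv_rows(path, encoding="utf-8"):
    """A stand-in for pd.read_csv: returns a list of dicts, one per row."""
    with open(path, newline="", encoding=encoding) as handle:
        return list(csv.DictReader(handle))
```

Usage mirrors the row-count example: cached_open('data.csv', read_csv_rows, transform=len).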

You can also pass a rel=True parameter if you want to specify the filename relative to the folder of the calling file. For example, if D:/app/calc.py has this code:

```python
conf = gramex.cache.open('config.yaml', 'yaml', rel=True)
```

… the config.yaml will be loaded from the same directory as the calling file, D:/app/calc.py, that is from D:/app/config.yaml.

To simplify creating callback functions, use gramex.cache.opener. This converts functions that accept a handle or string into functions that accept a filename. gramex.cache.opener opens the file and passes the handle to the function.

For example, to read using pickle.load, use:

```python
import pickle
import gramex.cache

loader = gramex.cache.opener(pickle.load)
# pickle needs a binary handle, so open the file in 'rb' mode without an encoding
data = gramex.cache.open('data.pickle', loader, mode='rb', encoding=None, errors=None)
```

To register your function permanently against an extension, add it to gramex.cache.open_callback. For example, to load .pickle files, you can use:

```python
gramex.cache.open_callback['pickle'] = gramex.cache.opener(pickle.load)
data = gramex.cache.open('data.pickle', mode='rb', encoding=None, errors=None)
```

If your function accepts a string instead of a handle, add the read=True parameter. This passes the contents read from the handle instead of the handle itself. For example, to compute the MD5 hash of a file, use:

```python
import hashlib
import gramex.cache

# read=True passes the file contents (bytes, in 'rb' mode) to the function
loader = gramex.cache.opener(lambda content: hashlib.md5(content).hexdigest(), read=True)
md5 = gramex.cache.open('template.txt', loader, mode='rb', encoding=None, errors=None)
```
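To make the handle-versus-contents distinction concrete, here is a standard-library sketch of what an opener-style wrapper does. make_opener is a hypothetical name and should not be read as gramex.cache.opener's source. open() options (mode, encoding, errors) are taken out of the keyword arguments. The callback receives either the open handle or, with read=True, the file's contents.

```python
import hashlib
import io


def make_opener(callback, read=False, **open_defaults):
    """Wrap callback(handle) or callback(contents) as loader(path, **kwargs)."""
    defaults = {"mode": "r", "encoding": "utf-8", "errors": "strict", **open_defaults}

    def loader(path, **kwargs):
        # open() options are taken out of kwargs; everything else goes to callback
        open_args = {name: kwargs.pop(name, value) for name, value in defaults.items()}
        with io.open(path, **open_args) as handle:
            return callback(handle.read() if read else handle, **kwargs)

    return loader


md5_loader = make_opener(lambda content: hashlib.md5(content).hexdigest(), read=True)
line_counter = make_opener(lambda handle: sum(1 for _ in handle))
```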

Query caching

gramex.cache.query runs SQL queries, returns the results as DataFrames, and caches them. The next time it is called, the query re-runs only if required.

For example, take this slow query:

```python
query = '''
    SELECT sales.date, product.name, SUM(sales.value)
    FROM product, sales
    WHERE product.id = sales.product_id
    GROUP BY sales.date, product.name
'''
```

If sales data is updated daily, we need not run this query unless the latest date has changed. Then we can use:

```python
data = gramex.cache.query(query, engine, state='SELECT MAX(date) FROM sales')
```

gramex.cache.query is just like pd.read_sql, but with an additional state= parameter. state can be a query, typically a fast-running one. If running the state query returns a different result, the original query is re-run.

state can also be a function. For example, if a local file called .updated is changed every time the data is loaded, you can use:

```python
import os

data = gramex.cache.query(query, engine, state=lambda: os.stat('.updated').st_mtime)
```
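The state mechanism can be illustrated with sqlite3 from the standard library. This is a sketch of the idea, not gramex.cache.query itself. cached_query is hypothetical and returns plain row tuples rather than DataFrames. It re-runs the main query only when the state query (or state callable) returns something new, so the state must change whenever the data does.

```python
import sqlite3

# (connection, query, state) -> (last state value, cached rows)
_query_cache = {}


def cached_query(conn, query, state):
    """Run query, re-running it only when the state query or callable changes."""
    current = state() if callable(state) else conn.execute(state).fetchall()
    key = (conn, query, state)
    cached = _query_cache.get(key)
    if cached is not None and cached[0] == current:
        return cached[1]  # state unchanged: reuse the previous result
    rows = conn.execute(query).fetchall()
    _query_cache[key] = (current, rows)
    return rows


QUERY = "SELECT date, SUM(value) FROM sales GROUP BY date ORDER BY date"
STATE = "SELECT MAX(date) FROM sales"

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (date TEXT, value REAL)")
conn.execute("INSERT INTO sales VALUES ('2024-01-01', 10)")
print(cached_query(conn, QUERY, STATE))
```

If rows are added for a date that is already the latest, MAX(date) does not change and the stale result is returned. Pick a state query that reflects every update you care about.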

Module caching

The Python import statement loads a module only once. If it has already been loaded, it does not reload it.

During development, this means that you need to restart Gramex every time you change a Python file.

You can reload the module using importlib.reload(module_name), but this reloads the module every time, even if nothing has changed. If the module has any large calculations, this slows things down.

Instead, use gramex.cache.reload_module(module_name). This is like importlib.reload, but it reloads only if the file has changed.

For example, you can use it in a FunctionHandler:

```python
import my_utils
import gramex.cache

def my_function_handler(handler):
    # Code used during development -- reload module if source has changed
    gramex.cache.reload_module(my_utils)
    my_utils.method()
```

You can use it inside a template:

```html
{% import my_utils %}
{% import gramex.cache %}
{% set gramex.cache.reload_module(my_utils) %}
(Now my_utils.method() will have the latest saved code)
```

In both these cases, whenever my_utils.py is updated, the latest version will be used to render the FunctionHandler or template.
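The "reload only if changed" behaviour can be approximated with importlib.reload and the source file's modification time. This is a sketch, not gramex.cache.reload_module's implementation. reload_if_changed is a hypothetical name. It assumes the module was freshly imported the first time it is checked, so the first call only records the mtime.

```python
import importlib
import os

# module name -> source mtime seen at the last check
_seen_mtimes = {}


def reload_if_changed(module):
    """importlib.reload(module) only if its source file changed since the last call."""
    mtime = os.stat(module.__file__).st_mtime
    previous = _seen_mtimes.get(module.__name__)
    _seen_mtimes[module.__name__] = mtime
    if previous is None or previous == mtime:
        return False  # first check, or unchanged: keep the loaded module
    importlib.reload(module)
    return True
```

Calling reload_if_changed(my_utils) at the top of a handler costs one os.stat per call when nothing has changed.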

Subprocess streaming

You can run an OS command asynchronously using [gramex.cache.Subprocess](https://gramener.com/gramex/guide/api/cache/#gramex.cache.Subprocess). Use this instead of subprocess.Popen, because waiting on a subprocess.Popen command blocks Gramex until the command finishes.

Basic usage:

```python
import tornado.gen
import gramex.cache

@tornado.gen.coroutine
def function_handler(handler):
    proc = gramex.cache.Subprocess(['python', '-V'])
    out, err = yield proc.wait_for_exit()
    # out contains stdout result. err contains stderr result
    # proc.proc.returncode contains the return code
    # Python 3.4+ prints the version to stdout (Python 2 used stderr)
    raise tornado.gen.Return('Python version is ' + out.decode('utf-8'))
```

out and err contain the stdout and stderr from running python -V as bytes.

All keyword arguments supported by subprocess.Popen are supported here.

Streaming is supported. This lets you read the contents of stdout and stderr while the program runs. Example:

```python
import tornado.gen
import gramex.cache

@tornado.gen.coroutine
def function_handler(handler):
    proc = gramex.cache.Subprocess(['flake8'],
                                   stream_stdout=[handler.write],
                                   buffer_size='line')
    out, err = yield proc.wait_for_exit()
    # out will be an empty byte string since stream_stdout is specified
```

This reads the output of flake8 line by line (since buffer_size='line') and writes it by calling handler.write. The returned value for out is an empty byte string.

stream_stdout is a list of functions. You can provide any other callable here. For example:

```python
out = []
proc = gramex.cache.Subprocess(['flake8'],
                               stream_stdout=[out.append],
                               buffer_size='line')
```

… will write the output line-by-line into the out list using out.append.

stream_stderr works the same way as stream_stdout, but on stderr instead.
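For comparison, the same non-blocking, line-by-line streaming pattern can be written with Python's standard asyncio module. This sketch does not use gramex.cache.Subprocess or Tornado. run_streaming is a hypothetical name. Each stdout line goes to a callback as it arrives, much like stream_stdout=[...] with buffer_size='line'. stderr is collected and returned.

```python
import asyncio
import sys


async def run_streaming(cmd, on_stdout_line):
    """Run cmd without blocking the event loop, passing each stdout line to a callback.

    Returns (return code, captured stderr bytes).
    """
    proc = await asyncio.create_subprocess_exec(
        *cmd, stdout=asyncio.subprocess.PIPE, stderr=asyncio.subprocess.PIPE)
    stderr_chunks = []

    async def pump(stream, callback):
        async for line in stream:  # yields one line of bytes at a time
            callback(line)

    # Drain both pipes concurrently so neither can fill up and stall the child
    await asyncio.gather(pump(proc.stdout, on_stdout_line),
                         pump(proc.stderr, stderr_chunks.append))
    return await proc.wait(), b"".join(stderr_chunks)


if __name__ == "__main__":
    lines = []
    code, err = asyncio.run(run_streaming(
        [sys.executable, "-c", "print('a'); print('b')"], lines.append))
    print(code, lines)
```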